What Are the Best XGBoost GPU Hosting Providers?

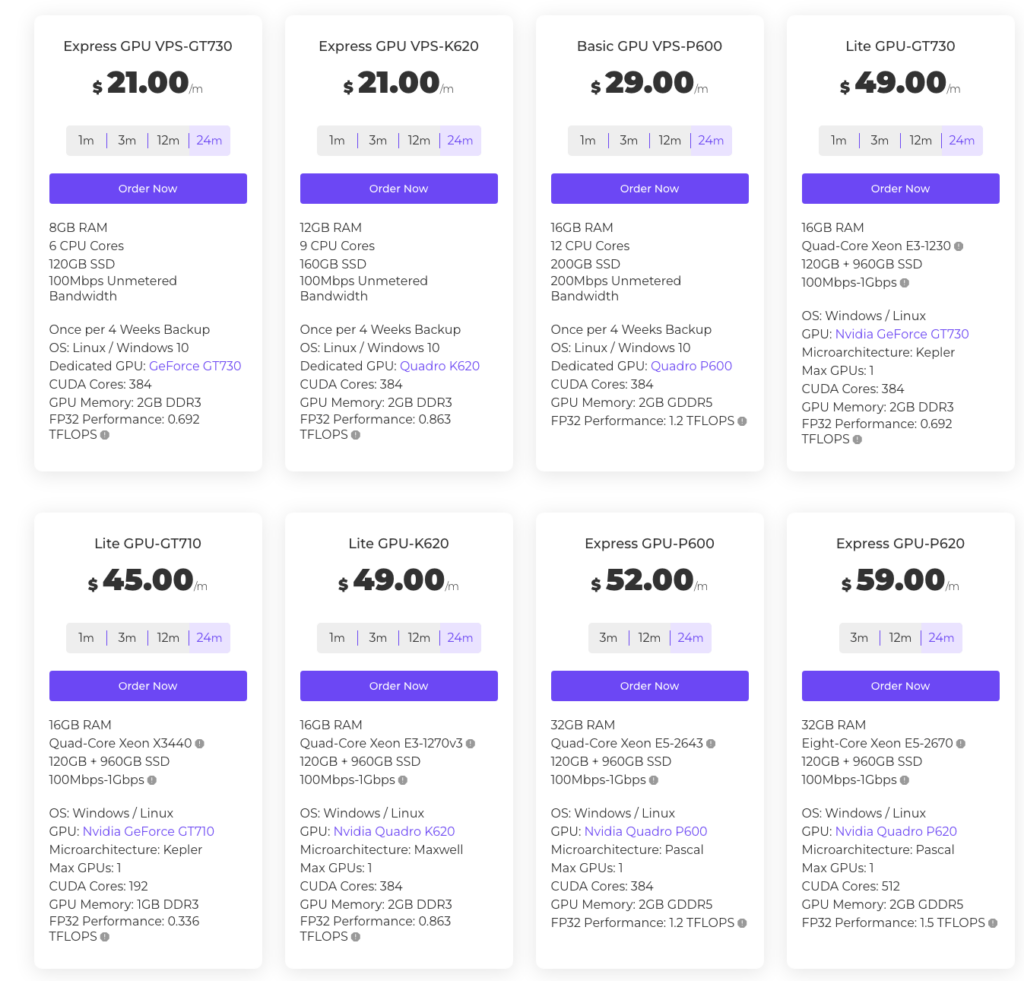

1. GPUMart

- Wide selection of GPU models, including NVIDIA A100 and RTX 4090

- Supports XGBoost and other machine learning frameworks

- Flexible configurations for various applications

- Starting at $21.00/month

Pros

- Affordable entry point

- Excellent customer support

- Flexible configurations

Cons

- Potential high network latency

- Complex hardware maintenance

GPUMart is a great choice for those looking for flexible GPU hosting options with support for high-performance models like the NVIDIA A100 and RTX 4090. It’s particularly well-suited for machine learning tasks, including XGBoost, at an affordable entry price of $21/month.

2. Amazon Web Services (AWS)

- Extensive range of GPU instances, including the p4d.24xlarge with 8xA100 GPUs

- Strong integration with AWS services

- Ideal for XGBoost and other machine learning tasks

- Pay-as-you-go pricing (e.g., $30.72/hour for p4d.24xlarge)

Pros

- Robust infrastructure

- Highly scalable solutions

- Supports spot instances for cost savings

Cons

- Complex pricing and configuration

- Overwhelming for beginners

Amazon Web Services (AWS) provides a powerful infrastructure for machine learning with GPUs like the NVIDIA A100. Its pay-as-you-go pricing model and extensive scalability make it an excellent choice for larger projects using XGBoost, though beginners may find the complexity challenging.
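To make the pay-as-you-go model concrete, here is a rough cost sketch in Python. It uses only the $30.72/hour p4d.24xlarge figure quoted above; the function names are made up for illustration, and real bills also depend on region, storage, data transfer, and spot discounts.

```python
# Rough on-demand cost estimate for a GPU instance billed by the hour.
# The rate below is the p4d.24xlarge figure quoted in this article, not a live price.
HOURLY_RATE_USD = 30.72
GPUS_PER_INSTANCE = 8  # p4d.24xlarge ships with 8 A100 GPUs


def on_demand_cost(hours: float, hourly_rate: float = HOURLY_RATE_USD) -> float:
    """Total cost in USD for running one instance for the given number of hours."""
    return round(hours * hourly_rate, 2)


def cost_per_gpu_hour(hourly_rate: float = HOURLY_RATE_USD,
                      gpus: int = GPUS_PER_INSTANCE) -> float:
    """Hourly cost divided evenly across the GPUs in the instance."""
    return round(hourly_rate / gpus, 2)


print("3-hour training job:", on_demand_cost(3))
print("Running 24/7 for 30 days:", on_demand_cost(24 * 30))
print("Per GPU-hour:", cost_per_gpu_hour())
```

At that rate a three-hour training run costs about $92, while leaving the same instance on for a 30-day month costs about $22,118, which is why hourly billing suits short, bursty training jobs better than always-on workloads.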

3. Google Cloud Platform (GCP)

- Offers NVIDIA GPUs, including A100 and T4

- Pre-built machine learning tools

- Strong integration with other Google services

- Hourly or monthly pricing based on GPU selection

Pros

- User-friendly interface

- Great integration with Google services

Cons

- Pricing can be high depending on usage

- Configuration complexity

Google Cloud Platform (GCP) is known for its user-friendly interface and integration with Google services, making it a popular choice for developers working on machine learning frameworks like XGBoost. However, its pricing can quickly escalate based on usage.

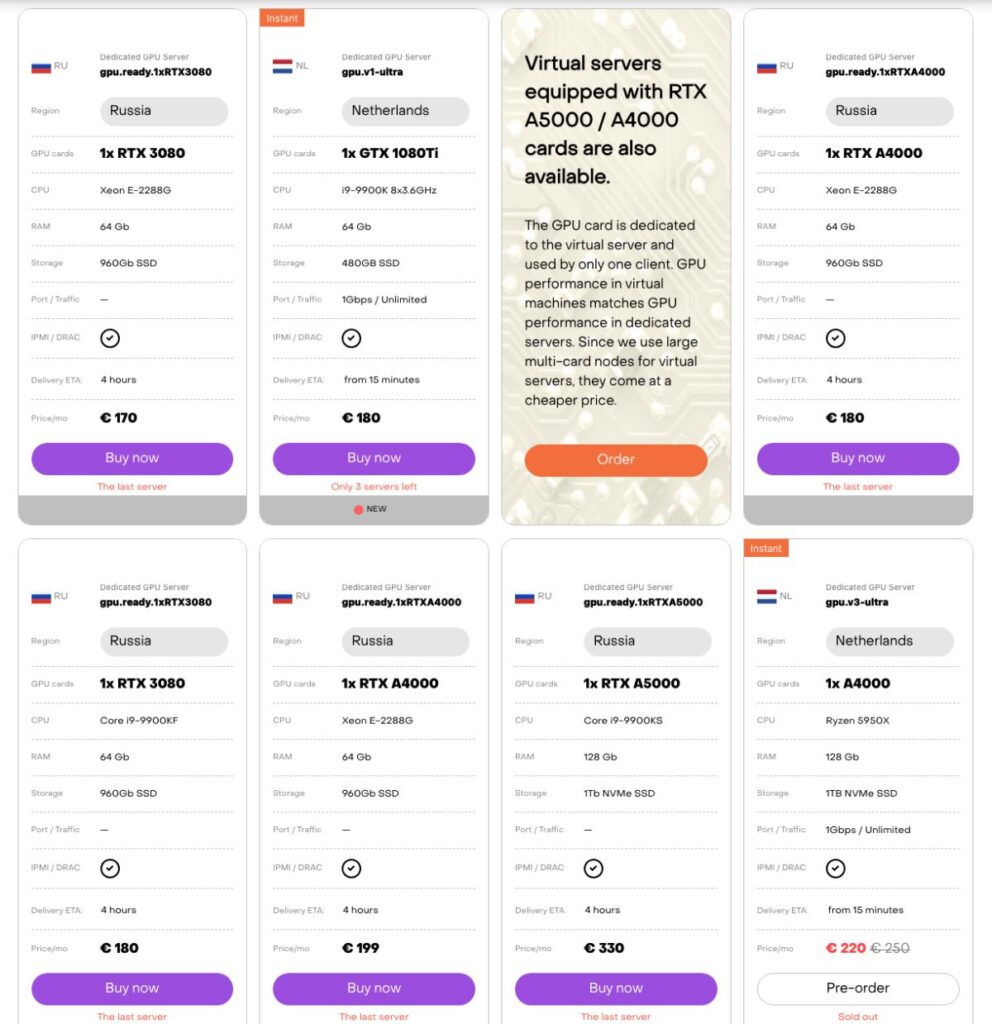

4. Cherry Servers

- Dedicated GPU servers optimized for high-performance tasks

- Supports XGBoost and other computational applications

- Flexible server configurations

- Starting at $81/month

Pros

- Competitive pricing with no hidden costs

- Excellent customer support

- Flexible server configurations

Cons

- Limited SMTP services

- Some customer support issues reported

Cherry Servers provides dedicated GPU hosting at competitive prices, with flexible configurations and support for XGBoost. While it offers excellent customer support, some users have reported limited SMTP services and occasional customer service issues.

5. Hostkey

- Dedicated servers with professional GPU cards like RTX A4000/A5000

- Designed for machine learning tasks, including XGBoost

- Diverse server options for different workloads

- Starting at €70/month

Pros

- Reliable performance

- Efficient handling of DDoS attacks

- Diverse server options

Cons

- Pricing can vary significantly

- Complex configurations may be costly

Hostkey provides powerful dedicated GPU servers with models like RTX A4000 and A5000, ideal for machine learning tasks such as XGBoost. While it offers reliable performance, pricing can vary significantly depending on specific configurations.

FAQs

1. What is XGBoost?

XGBoost (Extreme Gradient Boosting) is an advanced implementation of the gradient boosting algorithm designed for performance and speed. It improves the accuracy and efficiency of machine learning models, particularly for supervised learning tasks like regression, classification, and ranking.

2. How does XGBoost differ from other gradient boosting algorithms?

XGBoost stands out because of its optimizations for faster execution, regularization techniques to prevent overfitting, support for distributed computing, and an ability to handle missing data. It also supports tree pruning, reducing computational costs and improving model accuracy.

3. What are the main advantages of using XGBoost?

- Speed and Performance: Optimized for fast computation, XGBoost can handle large datasets efficiently.

- Accuracy: Its regularization techniques reduce overfitting and improve prediction accuracy.

- Customizability: The algorithm allows fine-tuning of hyperparameters, offering more control over model performance.

- Handling Missing Values: XGBoost can handle missing data efficiently without needing extensive preprocessing.

4. What is XGBoost GPU support?

XGBoost has built-in support for running on GPUs (Graphics Processing Units), which can drastically speed up training times for large datasets. By using the computational power of GPUs, tasks like gradient calculation and tree construction can be performed faster compared to CPUs, especially when dealing with large-scale datasets.

5. What types of GPUs can be used with XGBoost?

XGBoost supports CUDA-enabled NVIDIA GPUs. For optimal performance, it’s recommended to use GPUs with larger memory and higher processing capabilities, such as NVIDIA Tesla or RTX series cards.

6. How do I enable GPU support in XGBoost?

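To use XGBoost on a GPU, install a GPU-enabled build of XGBoost (via pip or from source) and select the GPU in your training parameters. In XGBoost versions before 2.0, set the tree_method parameter to "gpu_hist"; from 2.0 onward, "gpu_hist" is deprecated in favor of tree_method "hist" together with device "cuda". Here’s a sample implementation in Python:

```python
import xgboost as xgb

# X_train and y_train are assumed to be your feature matrix and labels.
params = {
    'tree_method': 'hist',           # Histogram-based tree construction
    'device': 'cuda',                # Train on the GPU (2.0+); older versions use tree_method='gpu_hist'
    'objective': 'binary:logistic',  # Example task
}

dtrain = xgb.DMatrix(X_train, label=y_train)
bst = xgb.train(params, dtrain, num_boost_round=100)
```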
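Because the GPU setting changed between releases (XGBoost 2.0 deprecated "gpu_hist" in favor of tree_method "hist" plus device "cuda"), a small helper can pick the right parameters for whichever version is installed. The function name below is hypothetical, and the sketch assumes a standard "major.minor.patch" version string.

```python
def gpu_training_params(xgboost_version: str) -> dict:
    """Return GPU-related training parameters for the given XGBoost version string."""
    major = int(xgboost_version.split(".")[0])
    if major >= 2:
        # XGBoost 2.0+: choose the device separately from the tree method.
        return {"tree_method": "hist", "device": "cuda"}
    # Older releases select the GPU through the tree method itself.
    return {"tree_method": "gpu_hist"}


print(gpu_training_params("2.1.0"))
print(gpu_training_params("1.7.6"))
```

In practice you would pass `xgb.__version__` and merge the result into your own parameter dictionary, for example `params = {"objective": "binary:logistic", **gpu_training_params(xgb.__version__)}`.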

7. What are the benefits of hosting XGBoost on GPUs?

- Faster Training: GPUs can parallelize operations, reducing training time for complex models with large datasets.

- Cost Efficiency: Using fewer but more powerful GPU instances may reduce overall costs compared to using multiple CPU instances.

- Scalability: GPU-accelerated XGBoost allows for efficient scaling in cloud environments, handling larger data sizes and complex models with ease.

8. What are the limitations of using GPUs with XGBoost?

While GPUs offer speed advantages, they also have some limitations:

- Memory Constraints: GPUs typically have less onboard memory than the host system's RAM, which can be a limitation for extremely large datasets.

- Cost: High-performance GPUs can be expensive, especially for long-running tasks.

- Compatibility: Not all hardware supports GPU acceleration, and using GPUs requires having CUDA-enabled hardware and drivers properly set up.
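As a quick sanity check on the memory constraint, you can estimate the raw size of a dataset before renting a GPU. The sketch below assumes a dense float32 matrix (4 bytes per value) and an arbitrary 50% headroom factor for training overhead; both are illustrative assumptions, and XGBoost's actual GPU memory use will differ because it works on a compressed, quantized copy of the data.

```python
def dense_float32_gib(n_rows: int, n_cols: int) -> float:
    """Size in GiB of a dense float32 matrix with the given shape."""
    return n_rows * n_cols * 4 / 2**30


def fits_on_gpu(n_rows: int, n_cols: int, gpu_memory_gib: float,
                headroom: float = 0.5) -> bool:
    """Rough check: does the raw matrix fit within a fraction of GPU memory?

    `headroom` is a guessed safety factor, not a value taken from XGBoost.
    """
    return dense_float32_gib(n_rows, n_cols) <= gpu_memory_gib * headroom


# Example: 10 million rows by 100 features on a card with 24 GiB of memory.
print(round(dense_float32_gib(10_000_000, 100), 2), "GiB")
print(fits_on_gpu(10_000_000, 100, gpu_memory_gib=24))
```

If the estimate comes out close to the limit, that is a signal to look at a GPU with more memory or to reduce the dataset before training.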

9. Can I use XGBoost GPU support on cloud platforms like AWS, GCP, or Azure?

Yes, XGBoost can be used with GPU support on cloud platforms such as AWS, Google Cloud, and Microsoft Azure. These platforms offer GPU-accelerated instances, like AWS EC2 with NVIDIA GPUs, Google Cloud’s Compute Engine, or Azure’s N-series virtual machines, which are designed for intensive computation tasks like machine learning model training.

10. How can I optimize XGBoost performance on GPUs?

To get the best performance from XGBoost on GPUs, you can:

- Use the gpu_hist tree method: This is the fastest GPU-supported algorithm in XGBoost. (In XGBoost 2.0 and later, the equivalent setting is tree_method "hist" with device "cuda".)

- Tune batch size: A larger batch size can improve performance on GPUs.

- Monitor memory usage: Ensure your dataset fits into the GPU memory, or use cloud platforms with larger GPU memory options.

- Consider multi-GPU setups: In environments with multiple GPUs, you can further parallelize operations.

11. What are common use cases for XGBoost with GPU hosting?

- Finance: Predicting credit risk, fraud detection, and stock price prediction.

- Healthcare: Disease prediction and analysis of large-scale genomic data.

- E-commerce: Customer behavior prediction, recommendation engines, and sales forecasting.

- Marketing: Ad targeting, churn prediction, and customer segmentation.

12. How does XGBoost compare to other machine learning libraries like TensorFlow or PyTorch?

XGBoost is specialized for structured/tabular data and excels in tasks like classification and regression, whereas TensorFlow and PyTorch are more commonly used for deep learning tasks involving unstructured data, such as images and text. XGBoost is generally faster for structured data, while TensorFlow and PyTorch provide greater flexibility for building custom neural networks.

13. Are there alternatives to XGBoost with GPU support?

Yes, alternatives include:

- LightGBM: A gradient boosting framework by Microsoft that also supports GPU acceleration.

- CatBoost: Another gradient boosting algorithm with GPU support, particularly efficient with categorical features.

14. How can I monitor and manage GPU usage during XGBoost training?

Most cloud providers offer GPU monitoring tools to track utilization, temperature, and memory consumption. On local machines and dedicated servers, NVIDIA’s nvidia-smi command-line tool reports the same metrics in real time.
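For scripted monitoring, nvidia-smi can emit machine-readable CSV through its `--query-gpu` and `--format` options. The sketch below wraps that call in Python; the function names and dictionary keys are hypothetical, and it returns an empty list when nvidia-smi is not installed or the call fails.

```python
import shutil
import subprocess

QUERY_FIELDS = "utilization.gpu,memory.used,memory.total,temperature.gpu"


def _to_int(value: str):
    """Convert a CSV field to int, or None when the driver reports a non-numeric value."""
    value = value.strip()
    return int(value) if value.isdigit() else None


def parse_gpu_stats(csv_text: str) -> list:
    """Parse nvidia-smi CSV output (no header, no units) into one dict per GPU."""
    stats = []
    for line in csv_text.strip().splitlines():
        util, mem_used, mem_total, temp = (_to_int(v) for v in line.split(","))
        stats.append({
            "utilization_pct": util,
            "memory_used_mib": mem_used,
            "memory_total_mib": mem_total,
            "temperature_c": temp,
        })
    return stats


def read_gpu_stats() -> list:
    """Query nvidia-smi once; return [] if the tool is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        )
    except (subprocess.CalledProcessError, OSError):
        return []
    return parse_gpu_stats(result.stdout)


if __name__ == "__main__":
    print(read_gpu_stats())
```

Calling `read_gpu_stats()` periodically from a separate process while training runs gives a simple log of how close the job is to the GPU's memory limit.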

15. Where can I find further documentation on using XGBoost with GPU support?

You can find official documentation and GPU-specific instructions on the XGBoost GitHub repository or the XGBoost documentation website.